Posts

Showing posts from February, 2014

Posted by Unknown

Job opportunities at the MCMP

Posted by Catarina

The deductive use of logic in mathematics (Part III of 'Axiomatizations of arithmetic...')

Posted by Catarina

The descriptive use of logic in mathematics (Part II of 'Axiomatizations of arithmetic...')

Posted by Catarina

Axiomatizations of arithmetic and the first-order/second-order divide

Posted by Richard Pettigrew

CFP: Symposium on the Foundations of Mathematics, 7-8 July, Kurt Gödel Research Center, Vienna.

Posted by Unknown

Summer School on PROBABILITIES IN PHYSICS (July 21-26, 2014)

Posted by Richard Pettigrew

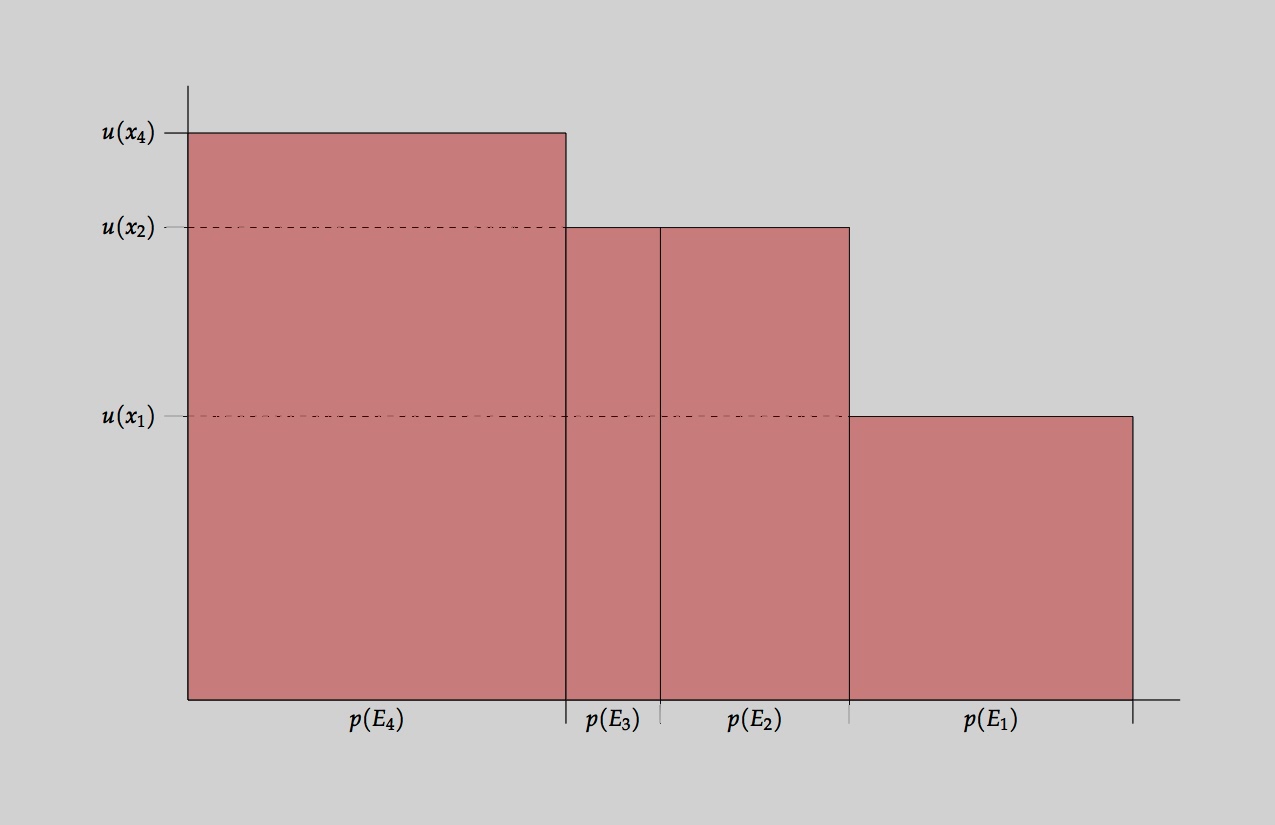

Buchak on risk and rationality